Task Automation in Fedora QA

Tim Flink

2013/08/10

Fedora QA

In Case You're Wondering ...

The raptor is there to fend off the sharks I was threatened with being fed to if I didn't submit slides.

Outline

- Introduction

- Where are we?

- Where are we going?

- How are we going to get there?

- How can you help?

- Questions

Who is this guy?

- Works for Red Hat, allocated to Fedora QA

- AutoQA is part of that work

- Spent 2.5 years developing a system-level test automation system for a large technology company.

Why should we listen to him?

- Has spent enough time with AutoQA and its associated tests to know where the biggest problems are.

Why are we talking about task automation?

- There are plenty of "tasks" that we're interested in which aren't necessarily "tests"

- create a boot.iso with every anaconda build

- upload/build a new cloud image and test it

- run static code analysis

This ends up being a mix of CI and traditional test automation concepts.

While static analysis has traditionally been in the realm of development, we don't really have a place to run it in Fedora development, and I don't see a reason not to run it as part of QA.

What is the Problem Here?

We have AutoQA, isn't that good enough?

My Answer: No

AutoQA is a great idea but there are some issues with its implementation which have hampered its expansion beyond the initial deployment.

That is not to say there is nothing good about AutoQA; it's just time to retire it and replace it with a system that reflects what we've learned from the initial one.

Issue 1: Tight Coupling

AutoQA is a complex beast that interacts with both koji and bodhi in the process of determining which jobs to run and setting up those jobs. The tight coupling that ended up in the code base makes development much more difficult than it needs to be.

Issue 2: Accepting Contributions

- Setting up an AutoQA development environment is far more difficult than it should be, which raises the bar for contributions much higher than it needs to be

- Any change to a test requires updating all of AutoQA, from the watchers and scheduling logic to the base libraries

Issue 3: Results and Reporting

- AutoQA generates a lot of data, most of which goes unread due to lack of human eyeball-hours

- Automated/AI analytics are difficult at best due to the logging and reporting setup that we currently have

- Current methods of notifying potentially interested parties are not adequate

This doesn't mean that what we have is crap, just that it isn't enough for the kind of analytics I would like to see us doing in the future.

There have been interesting proposals in the fedbus area around centralizing email code through messages; they will be worth looking into as we go forward.

Issue 4: Scaling

- Autotest isn't scaling well for us as we currently have it configured

- There are issues both with our setup and with autotest itself. Some of these should be improved in later versions of autotest.

I don't want people to read this and conclude "oh, autotest can't scale", which isn't really true. I'm emphasizing that part of the problem is how we have our database set up.

There has been recent work to improve the database queries in autotest, and we are not running the latest version. Please don't come away from this thinking that autotest has scaling problems and therefore shouldn't be used.

You've Convinced me, let's throw it all out!

No, let's not. That would be a bad idea.

Sure, let's "throw the baby out with the bathwater". That sounds like an incredibly efficient use of time :-/

I suspect that nobody is actually going to suggest we throw it all out.

But it needs to be changed!

- There are lots of good things and difficult problems already solved in AutoQA. Getting rid of everything would be akin to "throwing the baby out with the bathwater"

- We want to keep the good bits while reworking or throwing out the parts that need to change

Where are we going?

Design Philosophy

- I assume that neither I nor anyone else is smart/clairvoyant enough to know exactly what's going to be needed a year from now.

- So let's design to maximize flexibility instead of pretending to be able to get everything right the first time

There is a point of diminishing returns on flexibility, though, and I'm not suggesting that we go past it; I'm just suggesting we make things as flexible as possible without getting crazy about it.

Requirements

- Tasks should be easily executable outside the production environment

- Dev environments should be trivial to set up

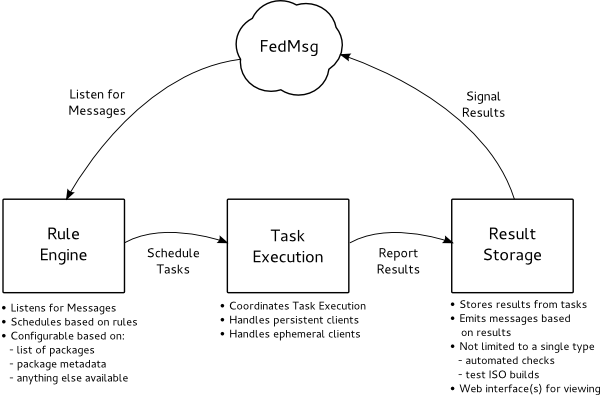
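To make the first requirement concrete, here is a minimal sketch assuming a task is nothing more than a Python function plus a thin command-line wrapper. The `run_task`/`main` names, the NVR format check and the `PASSED`/`FAILED` outcome strings are all hypothetical, not part of any existing taskbot code; the point is only that nothing in the task depends on the production scheduler.

```python
import argparse
import json


def run_task(item: str, item_type: str) -> dict:
    """Hypothetical example task: check that a build NVR has name-version-release form.

    The task depends only on its arguments, not on any scheduler or
    production service, so it can be run directly from a shell.
    """
    # Split from the right because package names may themselves contain '-'
    parts = item.rsplit("-", 2)
    passed = len(parts) == 3 and all(parts)
    return {
        "item": item,
        "type": item_type,
        "outcome": "PASSED" if passed else "FAILED",
    }


def main(argv=None) -> int:
    """Parse arguments, run the task, print a JSON result, return an exit status."""
    parser = argparse.ArgumentParser(description="Run the example task locally")
    parser.add_argument("item", help="item to check, e.g. a koji build NVR")
    parser.add_argument("--type", dest="item_type", default="koji_build")
    args = parser.parse_args(argv)
    result = run_task(args.item, args.item_type)
    print(json.dumps(result))
    # Non-zero exit status on failure so shells and CI tools can react to it
    return 0 if result["outcome"] == "PASSED" else 1
```

Wiring `main` up as a console script (or calling `sys.exit(main())` under a `__main__` guard) would let a developer run the same task on a laptop that production runs, e.g. `main(["foo-1.0-1.fc19"])` returns 0.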

Input

This could listen to the raw fedmsg stream or continue to poll bodhi/koji as we have been doing in AutoQA. We will need to talk with the fedmsg folks about whether what will eventually be a somewhat critical piece of infrastructure should rely on fedmsg.

Either way, I suspect that we'll need the ability to fill in gaps with data from datanommer or straight koji/bodhi.
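One way to keep the choice between listening and polling open is to normalize every input into a single event type, so the rest of the system never knows where an event came from. The sketch below assumes that design; the `Event` format, the message dict shape (`topic`/`msg` keys, a `new` field where 1 means the koji build completed) and all class names are assumptions for illustration, not the real fedmsg or koji client APIs.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Protocol


@dataclass(frozen=True)
class Event:
    """Source-agnostic event handed to the rule engine (hypothetical format)."""
    kind: str  # e.g. "koji_build_completed"
    item: str  # e.g. a build NVR


class EventSource(Protocol):
    def events(self) -> Iterator[Event]: ...


class MessageBusSource:
    """Adapts message-bus messages, assumed to be dicts with 'topic' and 'msg' keys."""

    def __init__(self, messages: Iterable[dict]):
        self._messages = messages

    def events(self) -> Iterator[Event]:
        for message in self._messages:
            if not message["topic"].endswith(".buildsys.build.state.change"):
                continue
            body = message["msg"]
            # Assumed payload: 'new' holds the new koji build state (1 == COMPLETE)
            if body.get("new") == 1:
                nvr = f"{body['name']}-{body['version']}-{body['release']}"
                yield Event("koji_build_completed", nvr)


class PollingSource:
    """Adapts the results of polling koji directly (here simply a list of NVRs)."""

    def __init__(self, completed_builds: Iterable[str]):
        self._builds = completed_builds

    def events(self) -> Iterator[Event]:
        for nvr in self._builds:
            yield Event("koji_build_completed", nvr)


def merged_events(*sources: EventSource) -> list[Event]:
    """Combine sources in order, dropping duplicates so that filling gaps
    from a second source does not schedule the same work twice."""
    seen: set[Event] = set()
    merged: list[Event] = []
    for source in sources:
        for event in source.events():
            if event not in seen:
                seen.add(event)
                merged.append(event)
    return merged
```

Because both sources yield the same frozen `Event`, de-duplication is a simple set lookup, which is what makes "fill in the gaps from another source" cheap.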

Rule Engine

The rule engine is simple at the moment but will hopefully grow more capable over time; we'll need to figure out a better approach as it does.

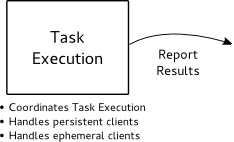
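A rule engine in its simplest form can be just a list of (predicate, task) pairs evaluated against each incoming event. The sketch below assumes that shape; the `Event` fields, the rule set and the task names (`rpmlint`, `build_boot_iso`, `depcheck`) are illustrative only and not a description of taskbot's actual rules.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Event:
    kind: str  # hypothetical event type, e.g. "koji_build_completed"
    item: str  # the thing the event is about, e.g. a build NVR


@dataclass(frozen=True)
class Rule:
    """Schedule `task` for an event's item whenever `matches(event)` is true."""
    matches: Callable[[Event], bool]
    task: str


def schedule(event: Event, rules: list[Rule]) -> list[tuple[str, str]]:
    """Return a (task, item) pair for every rule the event satisfies, in rule order."""
    return [(rule.task, event.item) for rule in rules if rule.matches(event)]


# Hypothetical rule set; the task names are illustrative only
RULES = [
    Rule(lambda e: e.kind == "koji_build_completed", "rpmlint"),
    Rule(
        lambda e: e.kind == "koji_build_completed" and e.item.startswith("anaconda-"),
        "build_boot_iso",
    ),
    Rule(lambda e: e.kind == "bodhi_update_pending", "depcheck"),
]
```

Keeping rules as data means a more sophisticated engine could later replace `schedule` without changing how events arrive or how tasks are executed.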

Task Execution

This has received the most suggestions as to which tool or toolset should be used. The evaluation is ongoing, but for now we're using buildbot.

Result Storage

The proof of concept uses some code that I slapped together while trying out different libraries; it will eventually be replaced with something more capable.

Design Summary

- Each part of taskbot could be replaced with minimal pain

- A wide variety of tasks could be supported
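"Replaceable with minimal pain" mostly comes down to the parts only talking through small interfaces. A minimal sketch of that idea, assuming plain callables and a `Protocol` for the executor (every name here, including `Taskbot` and `LocalExecutor`, is hypothetical rather than taken from the proof of concept):

```python
from typing import Callable, Iterable, Protocol

Outcome = str  # e.g. "PASSED" or "FAILED" (hypothetical values)


class Executor(Protocol):
    def run(self, task: str, item: str) -> Outcome: ...


class LocalExecutor:
    """Development stand-in; an executor backed by buildbot or another tool
    could replace it without touching the rest of the pipeline."""

    def __init__(self, tasks: dict[str, Callable[[str], Outcome]]):
        self._tasks = tasks

    def run(self, task: str, item: str) -> Outcome:
        return self._tasks[task](item)


class Taskbot:
    """Connects scheduling, execution and storage only through small interfaces."""

    def __init__(
        self,
        schedule: Callable[[str], list[str]],
        executor: Executor,
        store: Callable[[str, str, Outcome], None],
    ):
        self._schedule = schedule
        self._executor = executor
        self._store = store

    def handle(self, items: Iterable[str]) -> None:
        for item in items:
            for task in self._schedule(item):
                outcome = self._executor.run(task, item)
                self._store(task, item, outcome)
```

Swapping the rule engine, executor or result store means passing a different object to the constructor; nothing else in `Taskbot` changes, which is the opposite of the tight coupling described in Issue 1.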

How are we going to get there?

Phased Approach

- initial execution and basic status recording

- analytics

- more complicated execution/prep

Some examples of more complicated execution

- installation testing

- upgrade testing

- DE graphical testing

Plans for the Immediate Future

- Figure out task description

- Get a better plan for recording status and log storage

- Get an initial system deployed in Fedora infrastructure
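The task description format is still an open question. Purely as a sketch of what "figure out task description" could produce, assuming a declarative mapping (e.g. loaded from YAML or JSON) with hypothetical `name`, `trigger`, `command` and `timeout_s` fields, a small validator might look like this:

```python
import shlex
from dataclasses import dataclass

REQUIRED_KEYS = ("name", "trigger", "command")


@dataclass(frozen=True)
class TaskDescription:
    """Hypothetical task description format; the field names are assumptions."""
    name: str
    trigger: str                # event kind that should schedule the task
    command: tuple[str, ...]    # argv for the executor to run
    timeout_s: int = 3600


def parse_task(raw: dict) -> TaskDescription:
    """Validate a raw mapping and turn it into a TaskDescription."""
    missing = [key for key in REQUIRED_KEYS if key not in raw]
    if missing:
        raise ValueError(f"task description is missing: {', '.join(missing)}")
    command = raw["command"]
    if isinstance(command, str):
        # Respect shell-style quoting when the command is given as one string
        command = shlex.split(command)
    return TaskDescription(
        name=raw["name"],
        trigger=raw["trigger"],
        command=tuple(command),
        timeout_s=int(raw.get("timeout_s", 3600)),
    )
```

Keeping the description declarative would let the same file drive both a local run and a production run.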

How can I help?

Currently Needed Help

- ideas for things that you'd be interested in helping write and support to make sure that we're not leaving important use cases out

- not promising to support everything, but we'd like to avoid leaving out low-hanging fruit just because nobody thought of it